Multus CNI

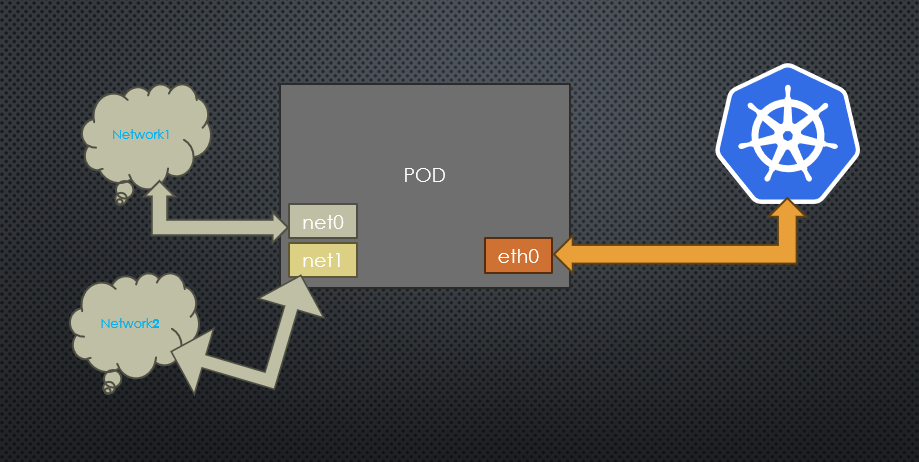

- By default, Kubernetes gives each pod a single network interface. In some scenarios, we need two or three interfaces in a pod so that it can connect to different backend services.

- Multus is an open-source CNI (Container Network Interface) plug-in for Kubernetes. It lets you attach multiple network interfaces to a single pod and give each one a different address range, using NetworkAttachmentDefinition custom resources (CRDs). In other words, Multus manages multiple container network interfaces in Kubernetes.

- It can also attach DPDK/SR-IOV interfaces to pods to support network-intensive workloads.

- Multus does not provide pod networking on its own; it depends on another network plugin, such as Flannel, Calico, Cilium or Weave, for the default cluster network.

- Role of Multus CNI:

  - It enables multi-interface support by acting as a supplementary layer in the container network.

  - It attaches multiple network interfaces to pods in Kubernetes.

  - It works as an intermediary between the container runtime and the other CNI plugins.
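To make the "intermediary" role concrete, here is a toy model in Python. It is a hypothetical sketch, not Multus code: the function names are ours and the addresses are borrowed from the samplepod2 output later in this walkthrough. The runtime makes one call; the meta-plugin calls the cluster-network delegate for eth0 and then each additional delegate, naming the extra interfaces net1, net2, ... as the pod outputs later show.

```python
# Hypothetical model of Multus as a meta-plugin: the container runtime makes
# ONE CNI call, and the meta-plugin fans it out to several delegate plugins.
# Nothing here runs a real CNI binary; names and addresses are illustrative.
from typing import Callable, Iterator

# A "plugin" takes an interface name and returns a description of what it set up.
Plugin = Callable[[str], str]


def make_fake_plugin(plugin_type: str, addresses: list[str]) -> Plugin:
    """Build a stand-in delegate that hands out the given addresses in order."""
    pool: Iterator[str] = iter(addresses)

    def add(ifname: str) -> str:
        # ifname is ignored by this stand-in; a real plugin would configure it.
        return f"{next(pool)} ({plugin_type})"

    return add


def meta_plugin_add(cluster_plugin: Plugin, extra_plugins: list[Plugin]) -> dict[str, str]:
    """Call the cluster-network delegate for eth0, then each additional
    delegate for net1, net2, ... and return {interface: result}."""
    result = {"eth0": cluster_plugin("eth0")}
    for index, plugin in enumerate(extra_plugins, start=1):
        ifname = f"net{index}"
        result[ifname] = plugin(ifname)
    return result


if __name__ == "__main__":
    calico = make_fake_plugin("calico", ["172.16.14.100/32"])
    macvlan = make_fake_plugin("macvlan", ["192.168.1.201/24", "192.168.1.202/24"])
    for ifname, outcome in meta_plugin_add(calico, [macvlan, macvlan]).items():
        print(ifname, outcome)
```

Passing the same macvlan delegate twice mirrors an annotation that lists the same network twice: one delegate, two interfaces.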

As we know, a pod normally has a single interface, eth0, which connects it to the Kubernetes cluster network so that it can reach Kubernetes servers/services (e.g. the kube-apiserver, the kubelet and so on).

net0 is an additional network attachment that connects the pod to network 1 by using another CNI plugin (e.g. vlan/vxlan/ptp).

net1 is another additional network attachment that connects the pod to network 2 by using another CNI plugin.

Before installing Multus, we can check that the cluster does not yet know the network-attachment-definitions resource type:

```
[root@master1 ~]# kubectl get network-attachment-definitions.k8s.cni.cncf.io
error: the server doesn't have a resource type "network-attachment-definitions"
```

We can install Multus quickly with the following command, which applies the thick-plugin DaemonSet manifest:

```
[root@master1 ~]# kubectl apply -f https://raw.githubusercontent.com/k8snetworkplumbingwg/multus-cni/master/deployments/multus-daemonset-thick.yml
customresourcedefinition.apiextensions.k8s.io/network-attachment-definitions.k8s.cni.cncf.io created
clusterrole.rbac.authorization.k8s.io/multus created
clusterrolebinding.rbac.authorization.k8s.io/multus created
serviceaccount/multus created
configmap/multus-daemon-config created
daemonset.apps/kube-multus-ds created

[root@master1 net.d]# kubectl get pods -A | grep -i multus
kube-system   kube-multus-ds-28dg4   1/1   Running   0   2m43s
kube-system   kube-multus-ds-hbddb   1/1   Running   0   2m43s
kube-system   kube-multus-ds-tnvgf   1/1   Running   0   2m43s

[root@master1 net.d]# kubectl get network-attachment-definitions.k8s.cni.cncf.io
No resources found in default namespace.
```

The output shows that the cluster now recognizes the network-attachment-definitions resource type; no objects of that type have been created yet.

```
[root@master1 net.d]# cd /etc/cni/net.d/
[root@master1 net.d]# cat 00-multus.conf | jq .
{
  "capabilities": {
    "bandwidth": true,
    "portMappings": true
  },
  "cniVersion": "0.3.1",
  "logLevel": "verbose",
  "logToStderr": true,
  "name": "multus-cni-network",
  "clusterNetwork": "/host/etc/cni/net.d/10-calico.conflist",
  "type": "multus-shim"
}
[root@master1 net.d]#
```

In this file, `cniVersion` tells each CNI plugin which version of the CNI specification is being used, `type` tells the runtime which binary to call on disk (here `multus-shim`), and `clusterNetwork` points to the Calico configuration that provides the default eth0 interface.
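JSON has no comment syntax, so a CNI config must not contain `#` notes if it is to parse. As a minimal sketch, the following loads a config like 00-multus.conf and pulls out the fields that show how Multus is wired into the node. The embedded text is a copy of the jq output above, and the `summarize` helper is a hypothetical name of ours; on a real node you would read the file instead.

```python
# Minimal sketch: inspect a Multus config such as /etc/cni/net.d/00-multus.conf.
# MULTUS_CONF mirrors the jq output above; summarize() is a hypothetical helper.
import json

MULTUS_CONF = """
{
  "capabilities": {"bandwidth": true, "portMappings": true},
  "cniVersion": "0.3.1",
  "logLevel": "verbose",
  "logToStderr": true,
  "name": "multus-cni-network",
  "clusterNetwork": "/host/etc/cni/net.d/10-calico.conflist",
  "type": "multus-shim"
}
"""


def summarize(conf_text: str) -> dict[str, object]:
    """Return the fields that describe how Multus is plugged in."""
    conf = json.loads(conf_text)  # raises json.JSONDecodeError on '#' comments
    enabled = sorted(name for name, on in conf.get("capabilities", {}).items() if on)
    return {
        "binary": conf["type"],                     # binary the runtime executes
        "cni_version": conf["cniVersion"],
        "cluster_network": conf["clusterNetwork"],  # delegate config used for eth0
        "capabilities": enabled,
    }


if __name__ == "__main__":
    for key, value in summarize(MULTUS_CONF).items():
        print(f"{key}: {value}")
```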

Every VM has its own interface names and gateway, so the NetworkAttachmentDefinition we create must contain the correct values for this host. First, identify the host's interface and default gateway:

```
[root@master1 ~]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
        ether 02:42:4f:74:7d:a6 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.1.31 netmask 255.255.255.0 broadcast 192.168.1.255
        inet6 fe80::a00:27ff:fe22:8301 prefixlen 64 scopeid 0x20<link>
        inet6 2401:4900:1c0a:4e0d:a00:27ff:fe22:8301 prefixlen 64 scopeid 0x0<global>
        ether 08:00:27:22:83:01 txqueuelen 1000 (Ethernet)
        RX packets 110855 bytes 16897880 (16.1 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 131686 bytes 82851882 (79.0 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 2456806 bytes 463770856 (442.2 MiB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 2456806 bytes 463770856 (442.2 MiB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

tunl0: flags=193<UP,RUNNING,NOARP> mtu 1480
        inet 172.16.68.0 netmask 255.255.255.255
        tunnel txqueuelen 1000 (IPIP Tunnel)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@master1 ~]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.1.1     0.0.0.0         UG    100    0        0 enp0s3
172.16.14.64    192.168.1.33    255.255.255.192 UG    0      0        0 tunl0
172.16.68.0     0.0.0.0         255.255.255.192 U     0      0        0 *
172.16.133.128  192.168.1.32    255.255.255.192 UG    0      0        0 tunl0
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.1.0     0.0.0.0         255.255.255.0   U     100    0        0 enp0s3
[root@master1 ~]#
```
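Instead of reading the routing table by eye, the default route can be picked out programmatically. A minimal sketch, assuming the `route -n` text is available as a string (here copied from the output above); the `default_route` helper is hypothetical. It returns the gateway and interface to use as `gateway` and `master` in the macvlan configuration, preferring the lowest metric if several default routes exist.

```python
# Minimal sketch: find the default gateway and its interface in `route -n` output.
# ROUTE_OUTPUT is copied from the output above; default_route() is a hypothetical helper.
ROUTE_OUTPUT = """\
Kernel IP routing table
Destination Gateway Genmask Flags Metric Ref Use Iface
0.0.0.0 192.168.1.1 0.0.0.0 UG 100 0 0 enp0s3
172.16.14.64 192.168.1.33 255.255.255.192 UG 0 0 0 tunl0
172.16.68.0 0.0.0.0 255.255.255.192 U 0 0 0 *
172.16.133.128 192.168.1.32 255.255.255.192 UG 0 0 0 tunl0
172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0
192.168.1.0 0.0.0.0 255.255.255.0 U 100 0 0 enp0s3
"""


def default_route(route_text: str) -> tuple[str, str]:
    """Return (gateway, interface) of the default route with the lowest metric."""
    defaults = []
    for line in route_text.splitlines():
        fields = line.split()
        # Data rows have 8 columns: Destination Gateway Genmask Flags Metric Ref Use Iface
        if len(fields) == 8 and fields[0] == "0.0.0.0" and fields[2] == "0.0.0.0":
            defaults.append((int(fields[4]), fields[1], fields[7]))
    if not defaults:
        raise ValueError("no default route in the given output")
    _, gateway, iface = min(defaults)  # lowest metric wins
    return gateway, iface


if __name__ == "__main__":
    gateway, iface = default_route(ROUTE_OUTPUT)
    print(f"master: {iface}, gateway: {gateway}")  # master: enp0s3, gateway: 192.168.1.1
```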

Now that we know the details (interface enp0s3 and gateway 192.168.1.1 on the 192.168.1.0/24 network), we can create our own NetworkAttachmentDefinition:

```bash
cat <<EOF | kubectl create -f -
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: macvlan-conf
spec:
  # CNI config for the macvlan plugin: master = host interface, gateway = default gateway
  config: '{
      "cniVersion": "0.3.0",
      "type": "macvlan",
      "master": "enp0s3",
      "mode": "bridge",
      "ipam": {
        "type": "host-local",
        "subnet": "192.168.1.0/24",
        "rangeStart": "192.168.1.200",
        "rangeEnd": "192.168.1.216",
        "routes": [
          { "dst": "0.0.0.0/0" }
        ],
        "gateway": "192.168.1.1"
      }
    }'
EOF
```
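Before applying the definition, it can help to sanity-check the IPAM values. A minimal sketch using Python's standard `ipaddress` module; the checks and the `check_ipam`/`range_size` helpers are our own suggestions, not rules enforced by Multus or host-local. It confirms that the range and gateway sit inside 192.168.1.0/24, that the gateway is not part of the pod range, and how many addresses the range holds. It cannot tell whether 192.168.1.200 to 192.168.1.216 is actually unused on your LAN; that still has to be checked by hand.

```python
# Minimal sketch: sanity-check host-local IPAM values before applying the NAD.
# IPAM is a copy of the "ipam" section above; the helpers are hypothetical.
import ipaddress

IPAM = {
    "type": "host-local",
    "subnet": "192.168.1.0/24",
    "rangeStart": "192.168.1.200",
    "rangeEnd": "192.168.1.216",
    "routes": [{"dst": "0.0.0.0/0"}],
    "gateway": "192.168.1.1",
}


def check_ipam(ipam: dict) -> list[str]:
    """Return a list of problems; an empty list means the values look consistent."""
    problems = []
    subnet = ipaddress.ip_network(ipam["subnet"])
    start = ipaddress.ip_address(ipam["rangeStart"])
    end = ipaddress.ip_address(ipam["rangeEnd"])
    gateway = ipaddress.ip_address(ipam["gateway"])
    for label, addr in (("rangeStart", start), ("rangeEnd", end), ("gateway", gateway)):
        if addr not in subnet:
            problems.append(f"{label} {addr} is outside {subnet}")
    if start > end:
        problems.append("rangeStart is greater than rangeEnd")
    if start <= gateway <= end:
        problems.append(f"gateway {gateway} lies inside the pod address range")
    return problems


def range_size(ipam: dict) -> int:
    """Number of addresses in rangeStart..rangeEnd (inclusive)."""
    start = int(ipaddress.ip_address(ipam["rangeStart"]))
    end = int(ipaddress.ip_address(ipam["rangeEnd"]))
    return end - start + 1


if __name__ == "__main__":
    print("problems:", check_ipam(IPAM) or "none")
    print("addresses in range:", range_size(IPAM))  # addresses in range: 17
```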

Post-check of Multus CNI:

```
[root@master1 ~]# kubectl get network-attachment-definitions.k8s.cni.cncf.io macvlan-conf
NAME           AGE
macvlan-conf   56s

[root@master1 ~]# kubectl describe network-attachment-definitions.k8s.cni.cncf.io macvlan-conf
Name:         macvlan-conf
Namespace:    default
Labels:       <none>
Annotations:  <none>
API Version:  k8s.cni.cncf.io/v1
Kind:         NetworkAttachmentDefinition
Metadata:
  Creation Timestamp:  2024-05-12T07:25:32Z
  Generation:          1
  Managed Fields:
    API Version:  k8s.cni.cncf.io/v1
    Fields Type:  FieldsV1
    fieldsV1:
      f:spec:
        .:
        f:config:
    Manager:         kubectl-create
    Operation:       Update
    Time:            2024-05-12T07:25:32Z
  Resource Version:  252464
  UID:               9e259bce-de62-4342-a13c-44807dc6113c
Spec:
  Config:  { "cniVersion": "0.3.0", "type": "macvlan", "master": "enp0s3", "mode": "bridge", "ipam": { "type": "host-local", "subnet": "192.168.1.0/24", "rangeStart": "192.168.1.200", "rangeEnd": "192.168.1.216", "routes": [ { "dst": "0.0.0.0/0" } ], "gateway": "192.168.1.1" } }
Events:  <none>
```

Pod configuration for Multus

```bash
cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: samplepod
  annotations:
    # NetworkAttachmentDefinition(s) to attach in addition to eth0
    k8s.v1.cni.cncf.io/networks: macvlan-conf
spec:
  containers:
  - name: samplepod
    command: ["/bin/ash", "-c", "trap : TERM INT; sleep infinity & wait"]
    image: alpine
EOF
```

Let's check our newly created pod.

```
[root@master1 ~]# kubectl get pods samplepod
NAME        READY   STATUS    RESTARTS   AGE
samplepod   1/1     Running   0          28s
```

The pod is running. Now we can inspect its interfaces:

```
[root@master1 ~]# kubectl exec -it samplepod -- /bin/sh
/ # ifconfig
eth0      Link encap:Ethernet HWaddr A6:56:BC:E1:26:AC
          inet addr:172.16.14.81 Bcast:0.0.0.0 Mask:255.255.255.255
          inet6 addr: fe80::a456:bcff:fee1:26ac/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1480 Metric:1
          RX packets:13 errors:0 dropped:0 overruns:0 frame:0
          TX packets:9 errors:0 dropped:1 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1912 (1.8 KiB) TX bytes:726 (726.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1 Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING MTU:65536 Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

net1      Link encap:Ethernet HWaddr 6E:7B:C9:22:6F:B0
          inet addr:192.168.1.200 Bcast:192.168.1.255 Mask:255.255.255.0
          inet6 addr: fe80::6c7b:c9ff:fe22:6fb0/64 Scope:Link
          inet6 addr: 2401:4900:1c0a:4e0d:6c7b:c9ff:fe22:6fb0/64 Scope:Global
          UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
          RX packets:23 errors:0 dropped:0 overruns:0 frame:0
          TX packets:19 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1704 (1.6 KiB) TX bytes:1554 (1.5 KiB)

/ #
```

eth0 (172.16.14.81) comes from the cluster network (Calico), while net1 (192.168.1.200, the first address of the macvlan range) is the additional interface attached by Multus.

Pod configuration for 2 additional interfaces

```bash
cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: samplepod2
  annotations:
    # Listing the same network twice attaches two interfaces from it
    k8s.v1.cni.cncf.io/networks: macvlan-conf,macvlan-conf
spec:
  containers:
  - name: samplepod
    command: ["/bin/ash", "-c", "trap : TERM INT; sleep infinity & wait"]
    image: alpine
EOF
```
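The annotation value is a comma-separated list, and each entry produces one extra interface. The sketch below is a simplified, hypothetical re-implementation of reading the short form `[namespace/]name[@interface]`, as we understand that form; it is not Multus's own parser, it does not handle the JSON-list form of the annotation, and the helper names are ours. Without an explicit interface name it numbers interfaces net1, net2, ..., which matches the net1/net2 names in the pod outputs of this walkthrough.

```python
# Simplified, hypothetical reading of the k8s.v1.cni.cncf.io/networks short form:
# comma-separated "[namespace/]name[@interface]" entries. Not Multus's real parser.
from dataclasses import dataclass


@dataclass(frozen=True)
class Attachment:
    namespace: str
    name: str
    interface: str


def parse_networks(annotation: str, pod_namespace: str = "default") -> list[Attachment]:
    """Turn an annotation value into one Attachment per listed network."""
    entries = [e.strip() for e in annotation.split(",") if e.strip()]
    attachments = []
    for index, entry in enumerate(entries, start=1):
        ref, _, ifname = entry.partition("@")        # optional "@interface" suffix
        namespace, _, name = ref.rpartition("/")     # optional "namespace/" prefix
        attachments.append(
            Attachment(
                namespace=namespace or pod_namespace,
                name=name,
                # No explicit interface: fall back to net1, net2, ...
                interface=ifname or f"net{index}",
            )
        )
    return attachments


if __name__ == "__main__":
    for a in parse_networks("macvlan-conf,macvlan-conf"):
        print(a.interface, f"{a.namespace}/{a.name}")
    # net1 default/macvlan-conf
    # net2 default/macvlan-conf
```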

```
[root@master1 ~]# kubectl exec pods/samplepod2 -- ifconfig
eth0      Link encap:Ethernet HWaddr EA:08:99:94:CD:45
          inet addr:172.16.14.100 Bcast:0.0.0.0 Mask:255.255.255.255
          inet6 addr: fe80::e808:99ff:fe94:cd45/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1480 Metric:1
          RX packets:13 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:1 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1912 (1.8 KiB) TX bytes:656 (656.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1 Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING MTU:65536 Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

net1      Link encap:Ethernet HWaddr 0A:4F:D5:FB:85:9F
          inet addr:192.168.1.201 Bcast:192.168.1.255 Mask:255.255.255.0
          inet6 addr: fe80::84f:d5ff:fefb:859f/64 Scope:Link
          inet6 addr: 2401:4900:1c0a:4e0d:84f:d5ff:fefb:859f/64 Scope:Global
          UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
          RX packets:15 errors:0 dropped:0 overruns:0 frame:0
          TX packets:19 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1196 (1.1 KiB) TX bytes:1506 (1.4 KiB)

net2      Link encap:Ethernet HWaddr 2E:0D:1A:4E:15:59
          inet addr:192.168.1.202 Bcast:192.168.1.255 Mask:255.255.255.0
          inet6 addr: 2401:4900:1c0a:4e0d:2c0d:1aff:fe4e:1559/64 Scope:Global
          inet6 addr: fe80::2c0d:1aff:fe4e:1559/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
          RX packets:14 errors:0 dropped:0 overruns:0 frame:0
          TX packets:18 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1140 (1.1 KiB) TX bytes:1512 (1.4 KiB)
```

Listing macvlan-conf twice in the annotation attaches two macvlan interfaces, net1 and net2, which receive the next addresses from the range (192.168.1.201 and 192.168.1.202).
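The macvlan addresses come out in order: .200 for samplepod, then .201 and .202 for samplepod2. A simplified model of the host-local allocator explains this. It assumes, as a simplification, that host-local hands out the next free address after the last one it reserved and wraps around at rangeEnd; the real plugin persists its reservations in files on each node. Because that state is per node, the model also shows a caveat: a node whose store is still empty starts again at 192.168.1.200, so pods on different nodes sharing one host-local range can receive the same address. `HostLocalModel` is a hypothetical name.

```python
# Toy, per-node model of host-local IPAM: hand out the next free address after
# the last reserved one, wrapping at rangeEnd. A simplification, not the plugin.
import ipaddress


class HostLocalModel:
    def __init__(self, range_start: str, range_end: str) -> None:
        self.start = int(ipaddress.IPv4Address(range_start))
        self.end = int(ipaddress.IPv4Address(range_end))
        self.reserved: dict[int, str] = {}  # address -> owner (pod/interface)
        self.last = self.end                # so the first allocation is rangeStart

    def allocate(self, owner: str) -> str:
        size = self.end - self.start + 1
        for step in range(1, size + 1):
            candidate = self.start + (self.last - self.start + step) % size
            if candidate not in self.reserved:
                self.reserved[candidate] = owner
                self.last = candidate
                return str(ipaddress.IPv4Address(candidate))
        raise RuntimeError("address range exhausted")

    def release(self, address: str) -> None:
        self.reserved.pop(int(ipaddress.IPv4Address(address)), None)


if __name__ == "__main__":
    node = HostLocalModel("192.168.1.200", "192.168.1.216")
    for owner in ("samplepod/net1", "samplepod2/net1", "samplepod2/net2"):
        print(owner, node.allocate(owner))  # .200, .201, .202
    # A second node keeps its own, empty store and starts at rangeStart again:
    other_node = HostLocalModel("192.168.1.200", "192.168.1.216")
    print("pod on another node", other_node.allocate("otherpod/net1"))  # .200 again
```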